We propose FastDiT-3D, a novel masked diffusion transformer tailored for efficient 3D point cloud generation, which greatly reduces training costs.

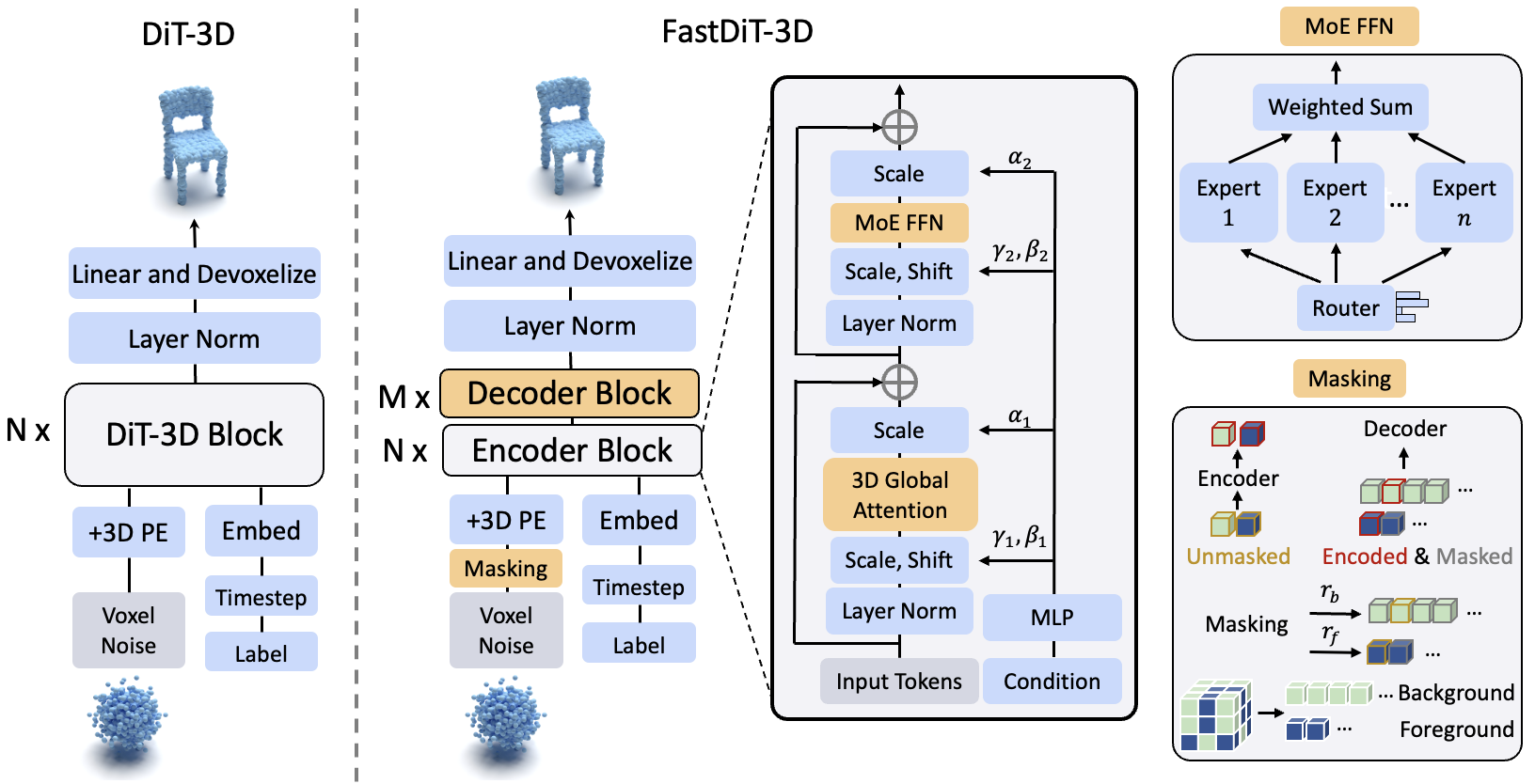

Diffusion Transformers have recently shown remarkable effectiveness in generating high-quality 3D point clouds. However, training voxel-based diffusion models on high-resolution 3D voxels remains prohibitively expensive due to the cubic complexity of attention operators, which arises from the additional dimension of voxels. Motivated by the inherent redundancy of 3D data compared to 2D, we propose FastDiT-3D, a novel masked diffusion transformer tailored for efficient 3D point cloud generation, which greatly reduces training costs. Specifically, we draw inspiration from masked autoencoders and run the denoising process dynamically on masked voxelized point clouds. We also propose a novel voxel-aware masking strategy that adaptively aggregates background and foreground information from voxelized point clouds. Our method achieves state-of-the-art performance with an extreme masking ratio of nearly 99%. Moreover, to improve multi-category 3D generation, we introduce Mixture-of-Experts (MoE) into the 3D diffusion model. Each category can learn a distinct diffusion path through different experts, which relieves gradient conflicts. Experimental results on the ShapeNet dataset demonstrate that our method achieves state-of-the-art high-fidelity and diverse 3D point cloud generation. FastDiT-3D improves the 1-Nearest Neighbor Accuracy and Coverage metrics when generating 128-resolution voxel point clouds, using only 6.5% of the original training cost.
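To make the voxel-aware masking idea concrete, here is a minimal sketch in plain Python. It assumes the voxel grid has already been split into patch tokens, each flagged as foreground (contains at least one occupied voxel) or background (empty), and it masks the two groups at separate ratios. The function name `voxel_aware_mask`, the per-group ratios, and the toy token counts are illustrative assumptions, not values or code from the paper.

```python
import random


def voxel_aware_mask(occupied, fg_ratio, bg_ratio, seed=0):
    """Return sorted indices of patch tokens that stay visible.

    occupied: list of bools, one per patch token (True = foreground).
    fg_ratio / bg_ratio: fraction of foreground / background tokens to mask.
    All names and ratios here are hypothetical, chosen for illustration.
    """
    rng = random.Random(seed)
    foreground = [i for i, occ in enumerate(occupied) if occ]
    background = [i for i, occ in enumerate(occupied) if not occ]

    kept = []
    for group, ratio in ((foreground, fg_ratio), (background, bg_ratio)):
        n_masked = int(round(len(group) * ratio))
        # Sample the visible subset uniformly within each group.
        kept.extend(rng.sample(group, len(group) - n_masked))
    return sorted(kept)


# Toy example: 1000 patch tokens, of which 100 are foreground.
occupied = [True] * 100 + [False] * 900
kept = voxel_aware_mask(occupied, fg_ratio=0.95, bg_ratio=0.99)
masked_fraction = 1 - len(kept) / len(occupied)
print(len(kept), round(masked_fraction, 3))
```

With these toy numbers only 14 of 1000 tokens remain visible, so the encoder would attend over a sequence roughly 70 times shorter than the full grid. That is the intuition behind the training-cost reduction, although the actual savings reported above depend on the full architecture.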

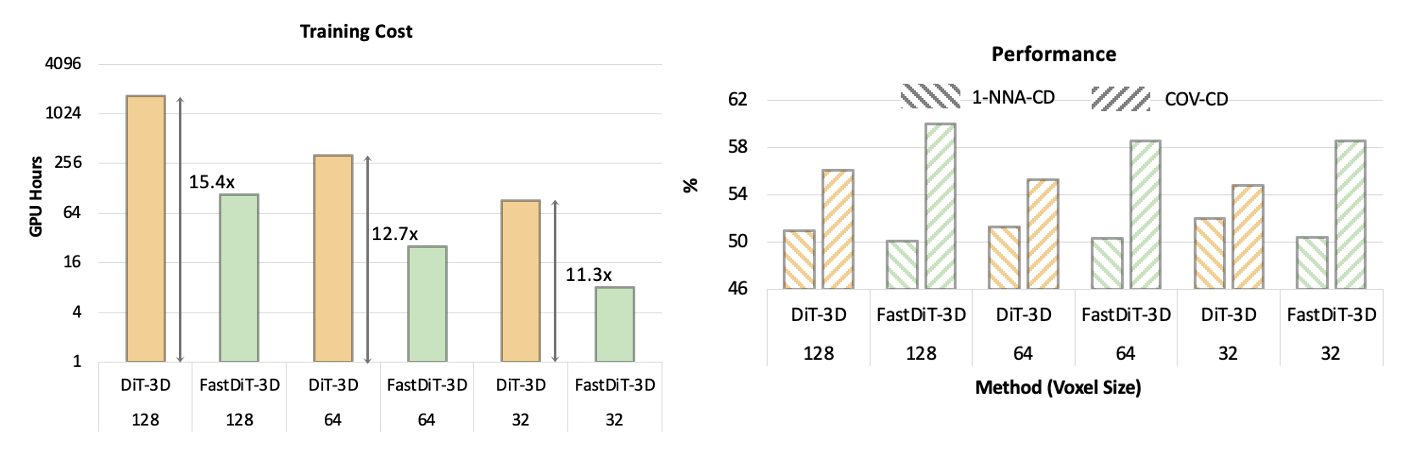

Comparison of the proposed FastDiT-3D with DiT-3D in terms of different voxel sizes on training costs (lower is better) and COV-CD performance (higher is better).

Our method achieves faster training while exhibiting superior performance.

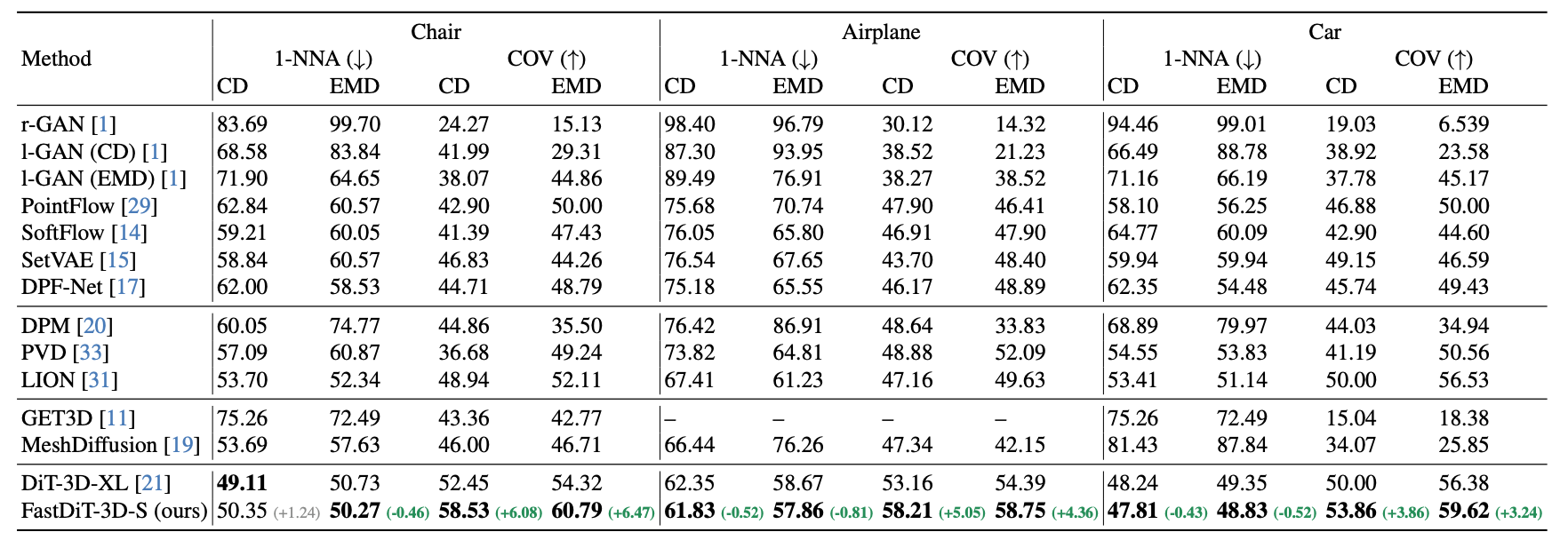

Comparison results (%) on shape metrics of our FastDiT-3D and state-of-the-art models. Our method significantly outperforms previous baselines across all classes.

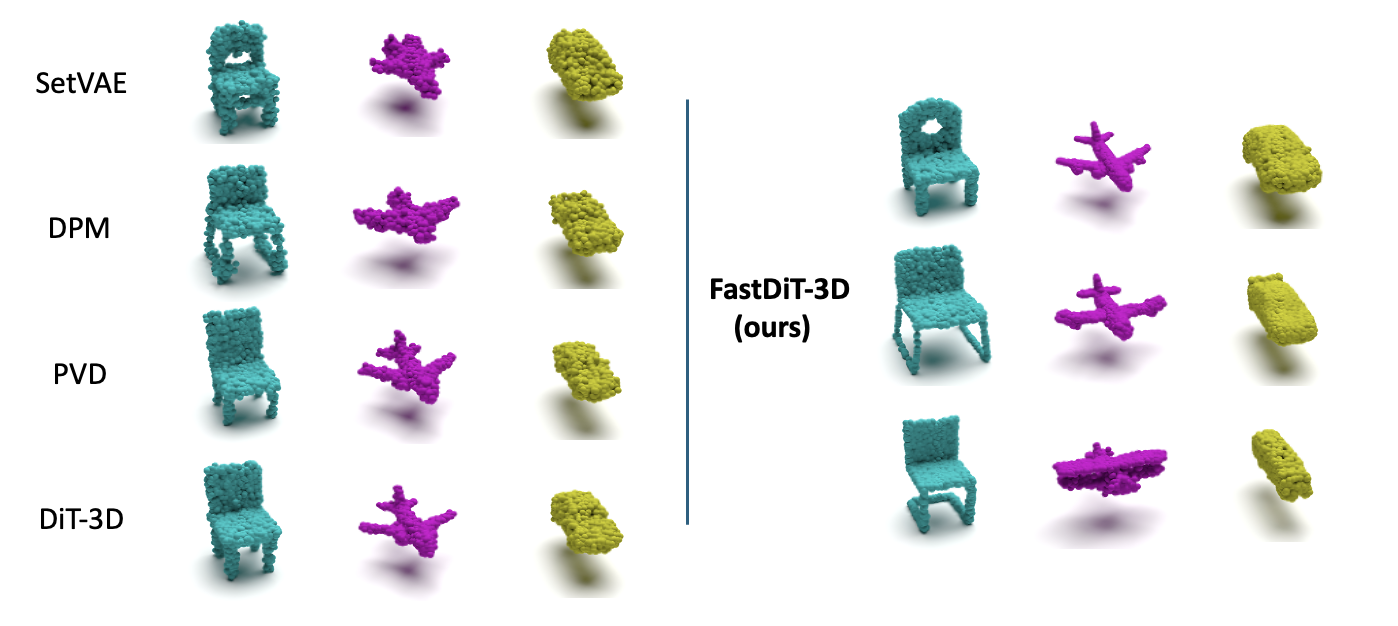

Qualitative comparisons with state-of-the-art methods for high-fidelity and diverse 3D point cloud generation. Our proposed FastDiT-3D produces better results for each category.

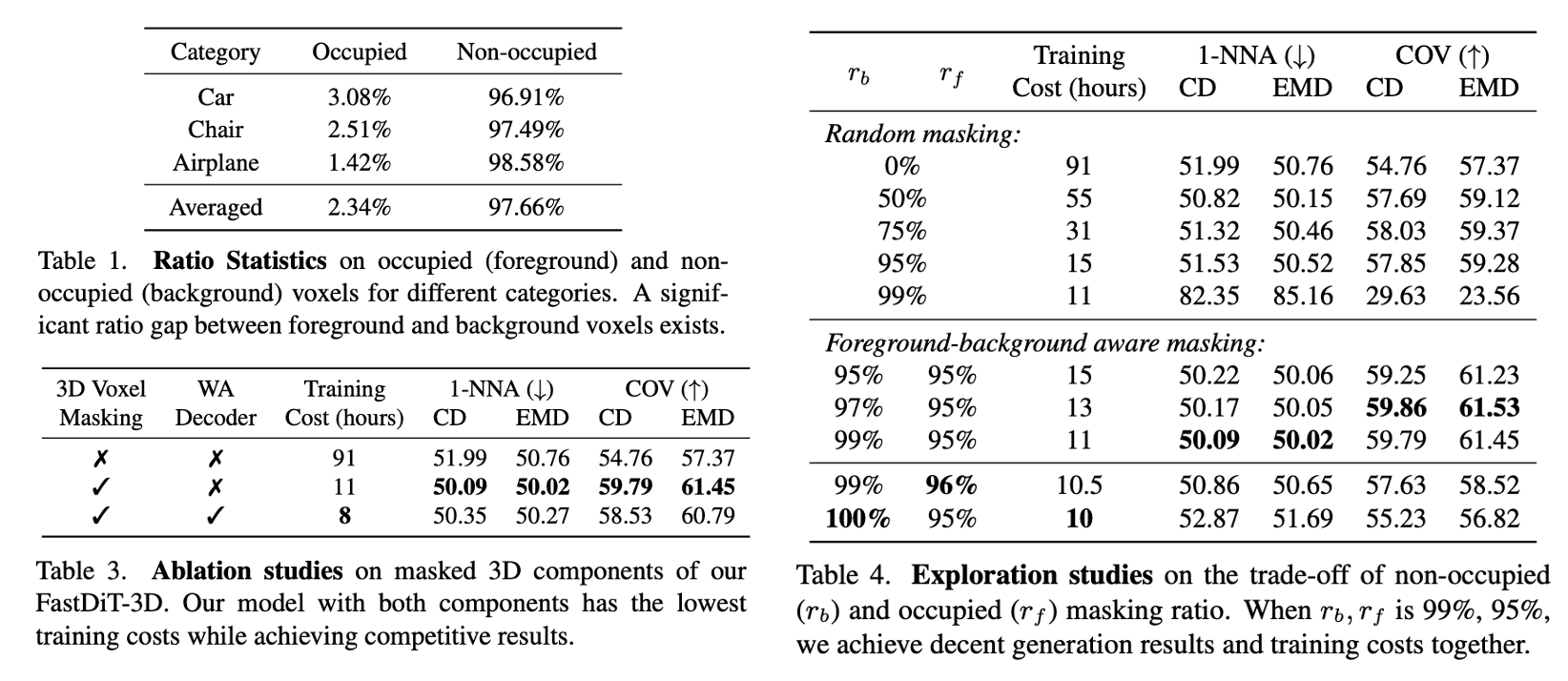

Analyses on ratio statistics, designed 3D components, and trade-off of non-occupied/occupied ratio.

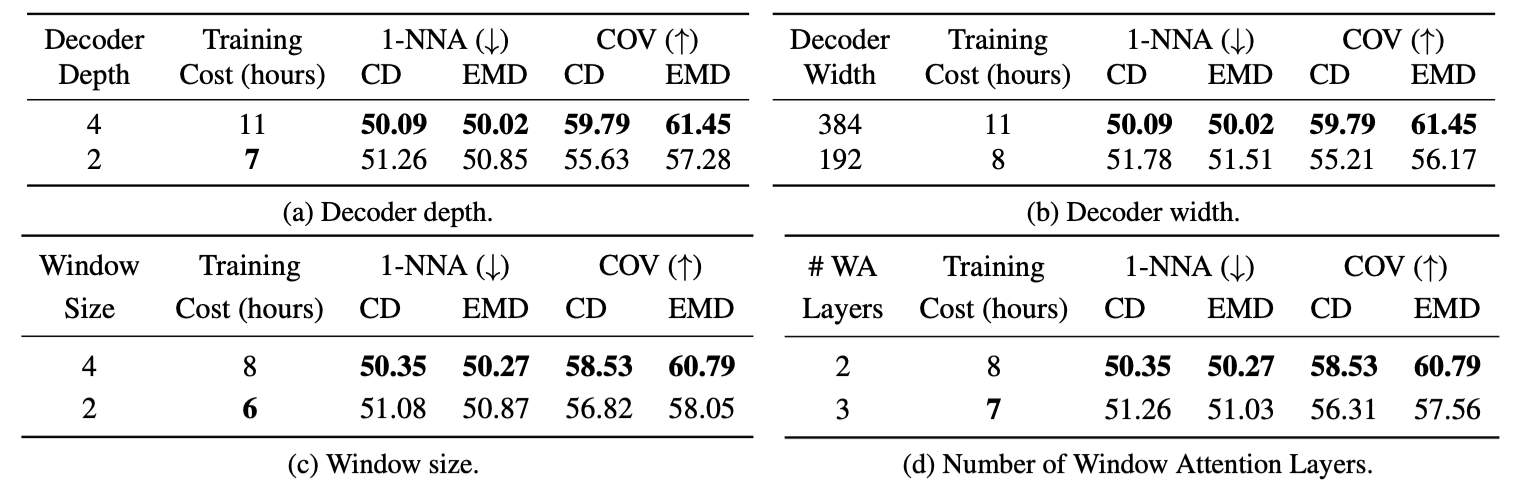

Ablation studies on decoder depth, width, window sizes, and the number of window attention layers.

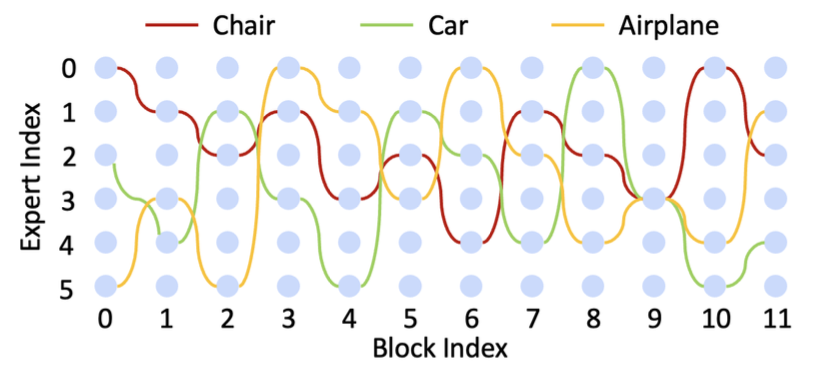

Qualitative visualizations of sampling paths across experts in Mixture-of-Experts encoder blocks for multi-class generation.
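A "path across experts" can be pictured with a small routing sketch. The example below assumes top-1 routing, where a linear router scores each token and sends it to the single highest-scoring expert. The page does not specify the routing rule, so top-1 selection, the function name `route_top1`, and the toy experts are all assumptions for illustration.

```python
def route_top1(tokens, router_weights, experts):
    """Send each token to the expert with the highest router score.

    tokens: list of feature vectors (lists of floats).
    router_weights: one weight row per expert (hypothetical linear router).
    experts: list of callables, one per expert.
    Returns (outputs, path), where path[i] is the expert index for token i.
    """
    outputs, path = [], []
    for token in tokens:
        scores = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
        best = max(range(len(scores)), key=scores.__getitem__)
        path.append(best)
        outputs.append(experts[best](token))
    return outputs, path


# Two toy experts and a router that keys on one feature each.
experts = [
    lambda t: [2 * x for x in t],  # expert 0: scale
    lambda t: [x + 1 for x in t],  # expert 1: shift
]
router_weights = [[1.0, 0.0], [0.0, 1.0]]
tokens = [[3.0, 1.0], [0.5, 2.0]]

outputs, path = route_top1(tokens, router_weights, experts)
print(path)     # [0, 1]
print(outputs)  # [[6.0, 2.0], [1.5, 3.0]]
```

Recording `path` at every MoE block over the sampling steps yields the kind of per-category expert trajectory that the figure visualizes.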

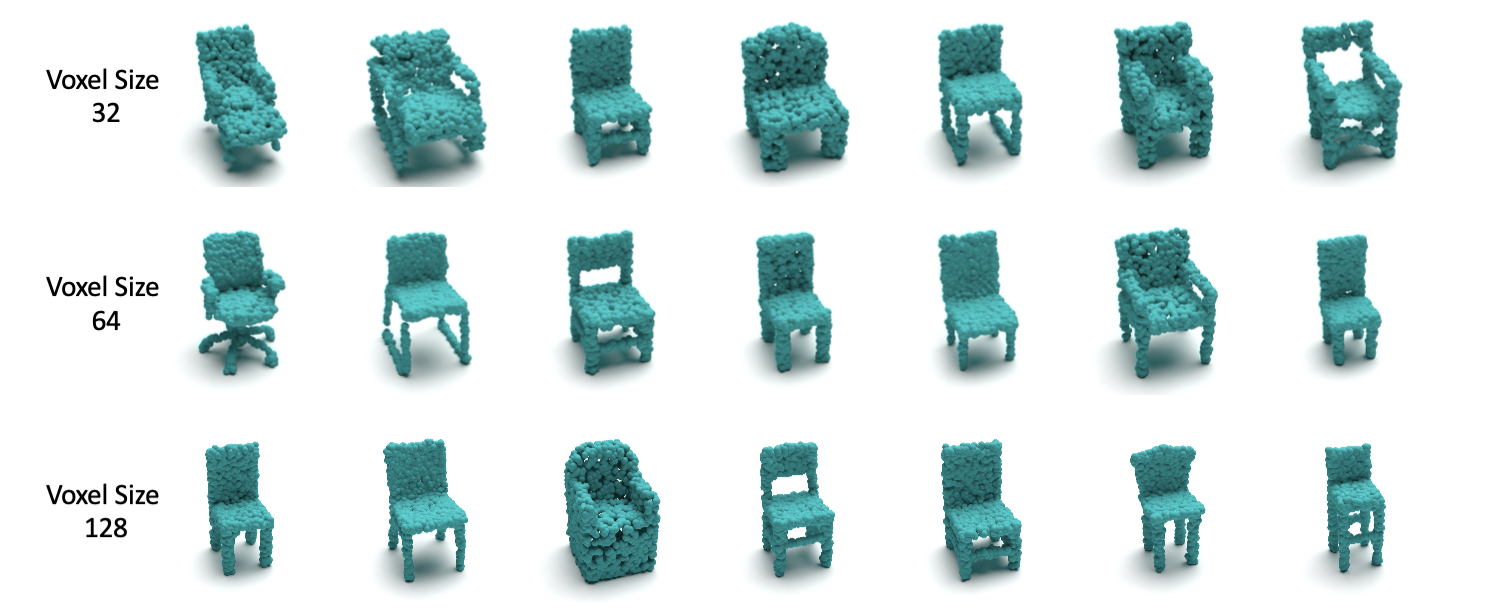

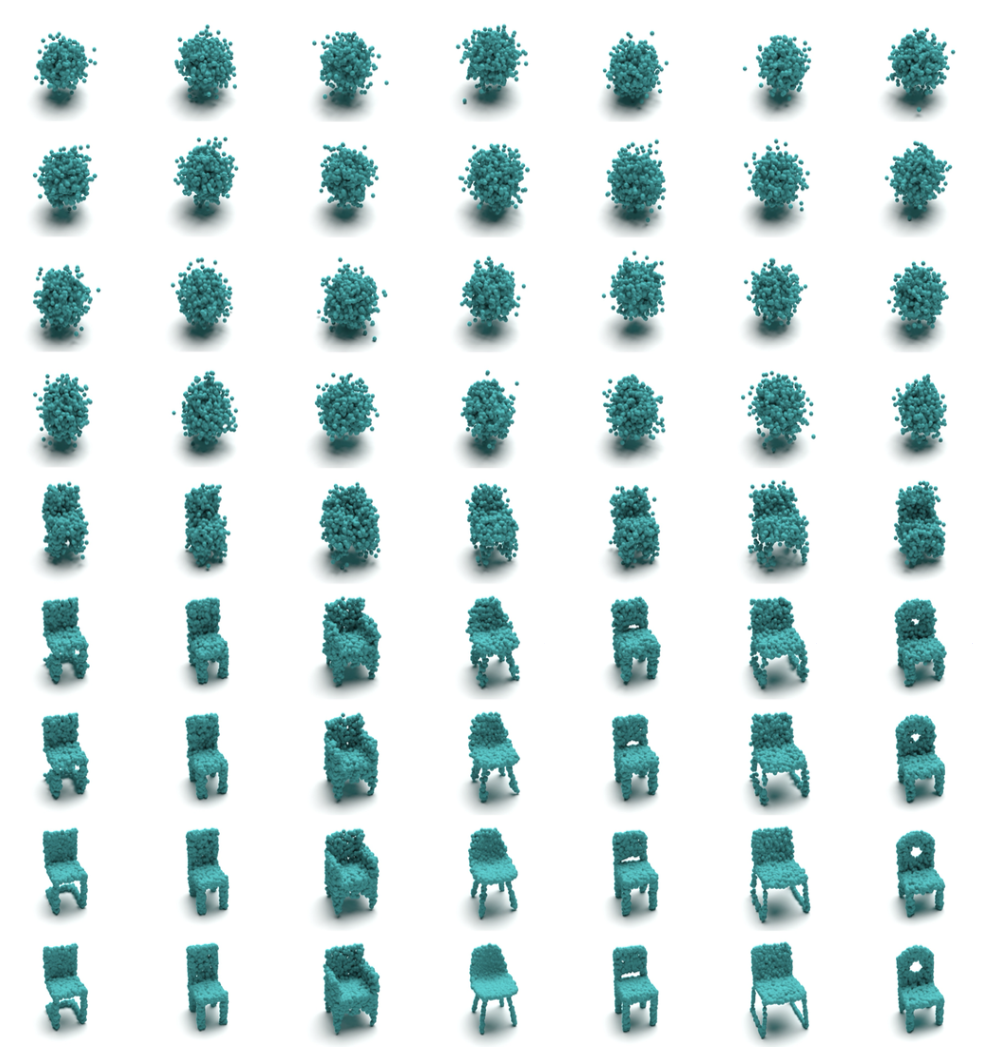

Qualitative visualizations of generated point clouds on chair category for various voxel sizes.

Qualitative visualizations of diffusion process for chair generation. The generation results from random noise to the final 3D shapes are shown in top-to-bottom order in each column.

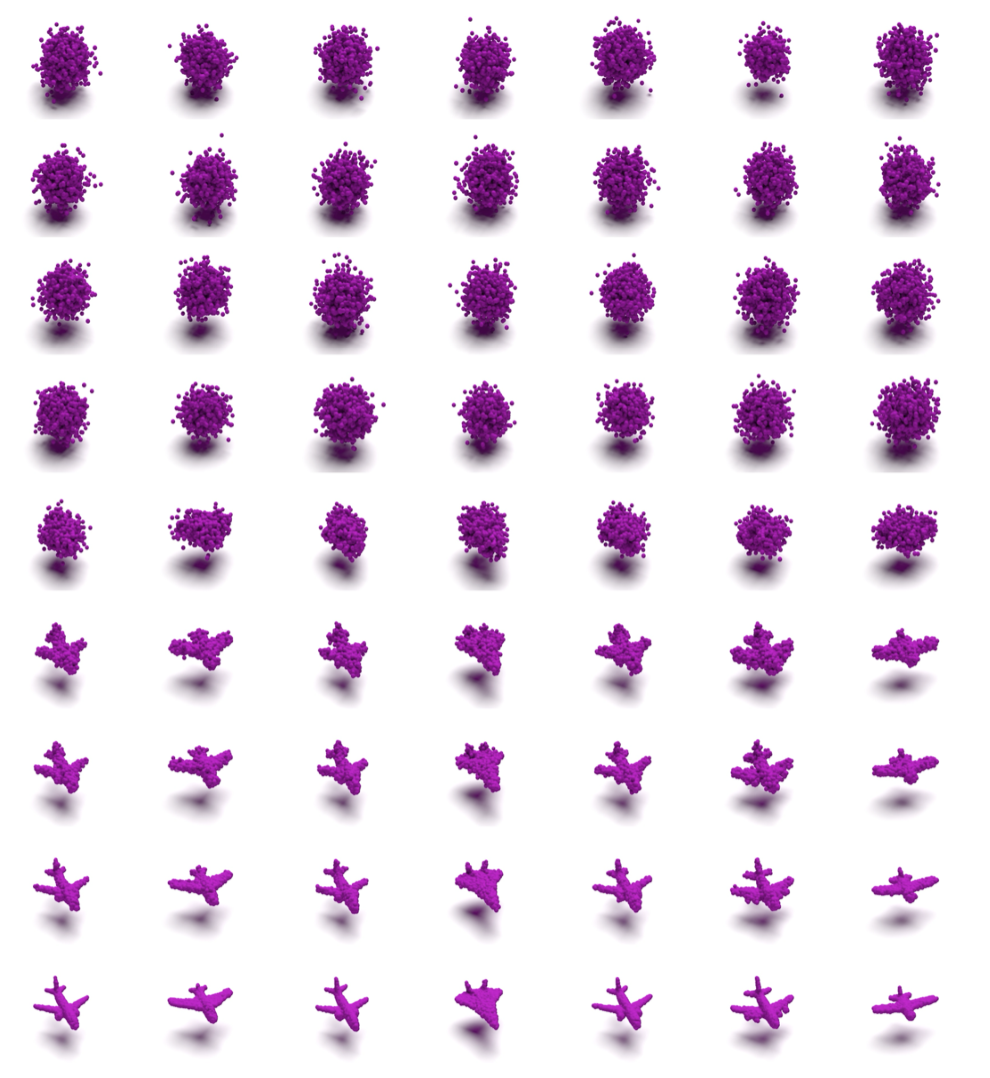

Qualitative visualizations of diffusion process for airplane generation.

Qualitative visualizations of diffusion process for car generation.

@article{mo2023fastdit3d,

title = {Fast Training of Diffusion Transformer with Extreme Masking for 3D Point Clouds Generation},

author = {Shentong Mo and Enze Xie and Yue Wu and Junsong Chen and Matthias Nießner and Zhenguo Li},

journal = {arXiv preprint arXiv:2312.07231},

year = {2023},

}