DiT-3D: Exploring Plain Diffusion Transformers for 3D Shape Generation

Abstract

Recent Diffusion Transformers (e.g., DiT) have demonstrated their powerful effectiveness in generating high-quality 2D images. However, it is unclear how the Transformer architecture performs equally well in 3D shape generation, as previous 3D diffusion methods mostly adopted the U-Net architecture. To bridge this gap, we propose a novel Diffusion Transformer for 3D shape generation, named DiT-3D, which can directly operate the denoising process on voxelized point clouds using plain Transformers. Compared to existing U-Net approaches, our DiT-3D is more scalable in model size and produces much higher quality generations. Specifically, the DiT-3D adopts the design philosophy of DiT but modifies it by incorporating 3D positional and patch embeddings to aggregate input from voxelized point clouds. To reduce the computational cost of self-attention in 3D shape generation, we incorporate 3D window attention into Transformer blocks, as the increased 3D token length resulting from the additional dimension of voxels can lead to high computation. Finally, linear and devoxelization layers are used to predict the denoised point clouds. In addition, we empirically observe that the pre-trained DiT-2D checkpoint on ImageNet can significantly improve DiT-3D on ShapeNet. Experimental results on the ShapeNet dataset demonstrate that the proposed DiT-3D achieves state-of-the-art performance in high-fidelity and diverse 3D point cloud generation.

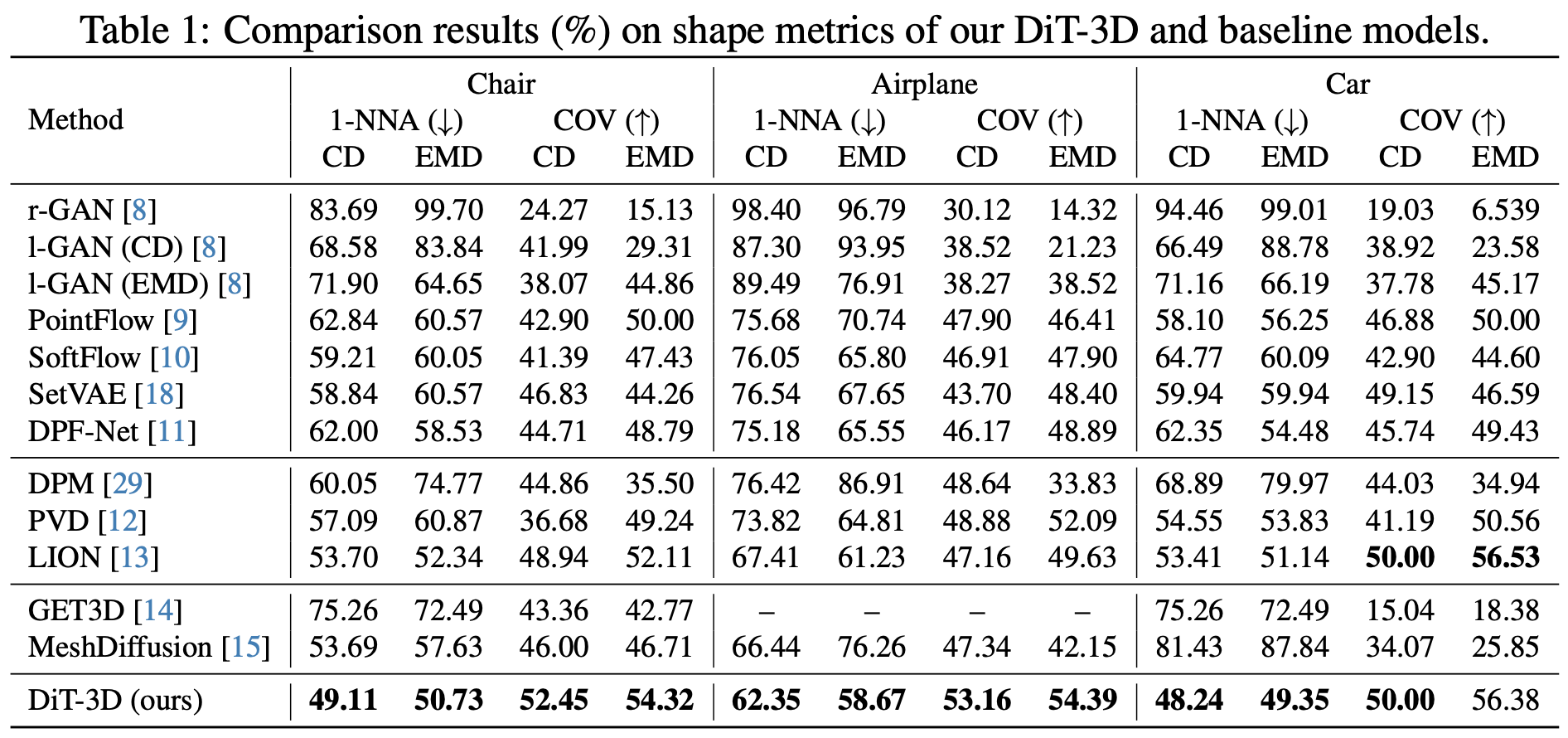

Experiments

We achieve the best performance in terms of all metrics, compared to previous non-DDPM and DDPM baselines.

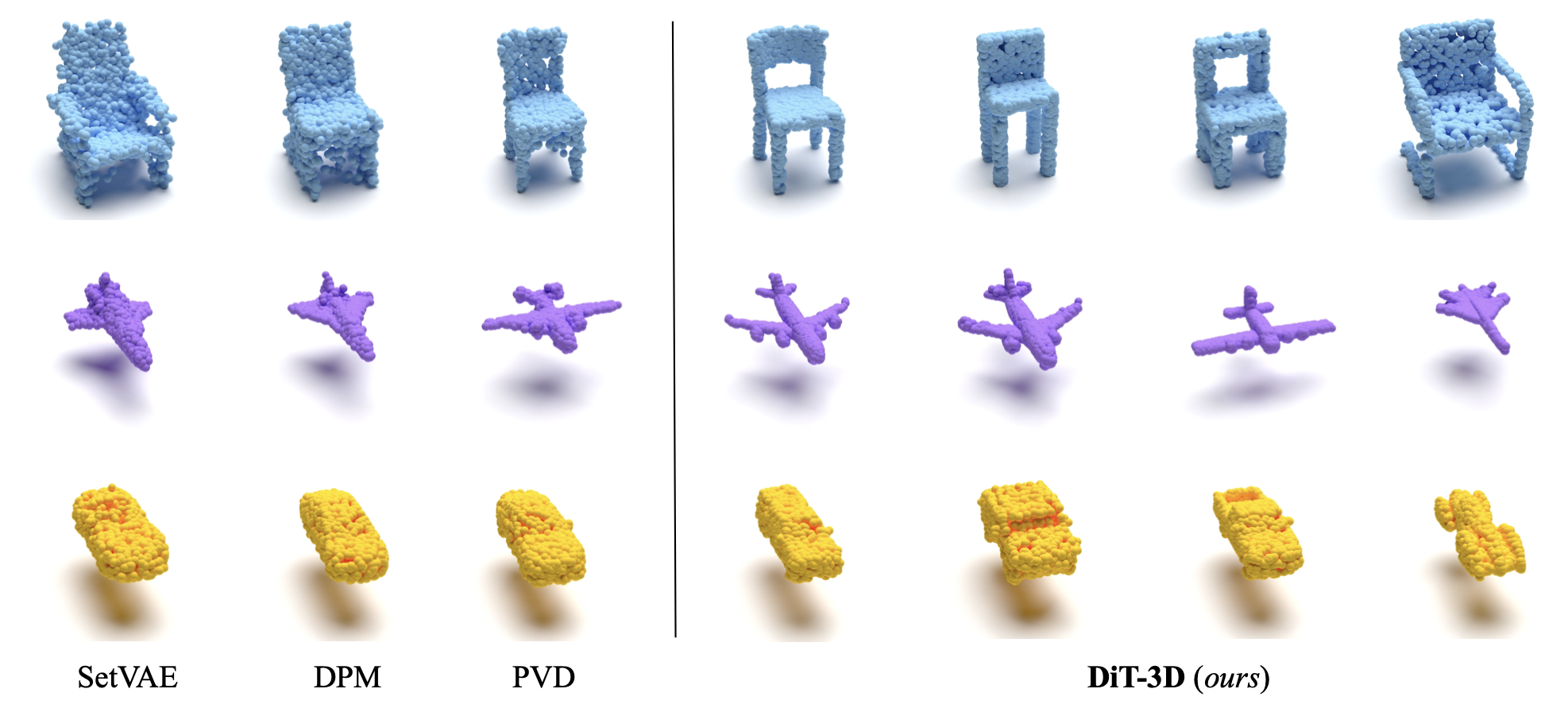

Qualitative comparisons with state-of-the-art works. The proposed DiT-3D generates high-fidelity and diverse point clouds of 3D shapes for each category.

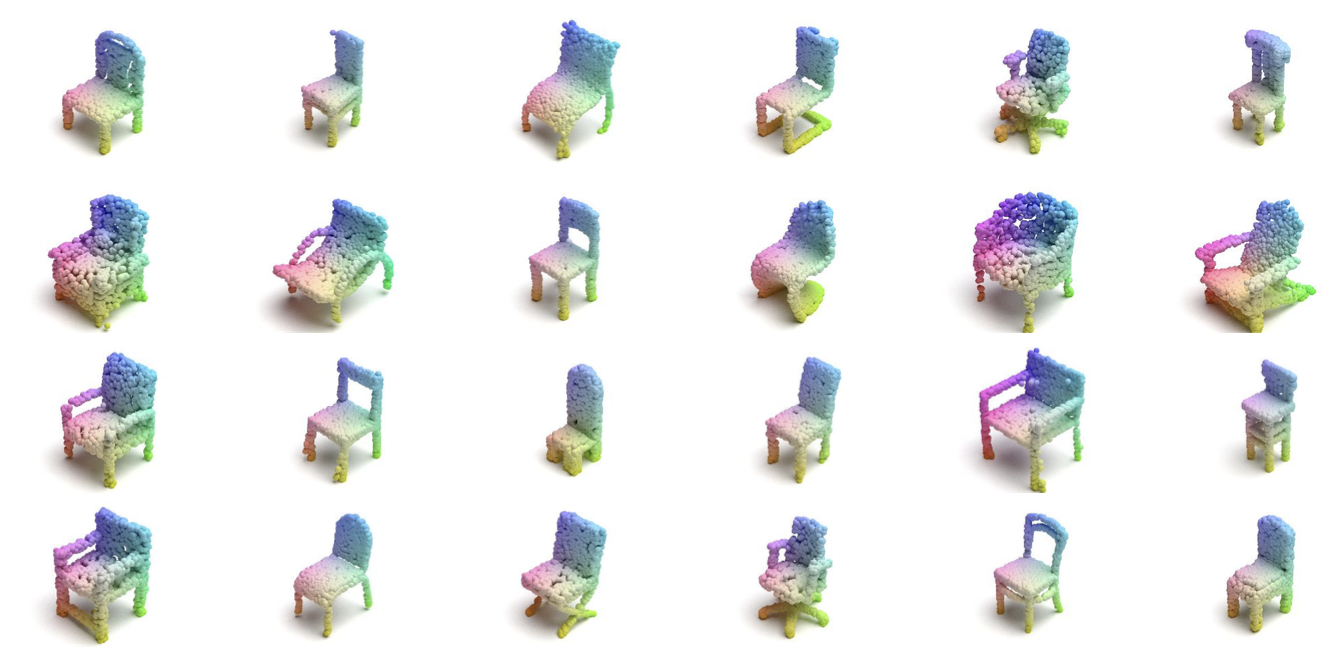

Qualitative visualizations of high-fidelity and diverse 3D point cloud generation.

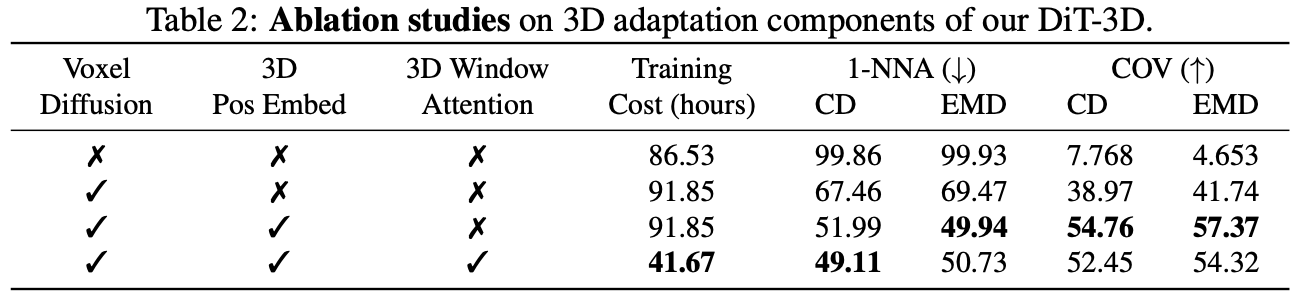

Experimental Analyses

Ablation on 3D Design Components.

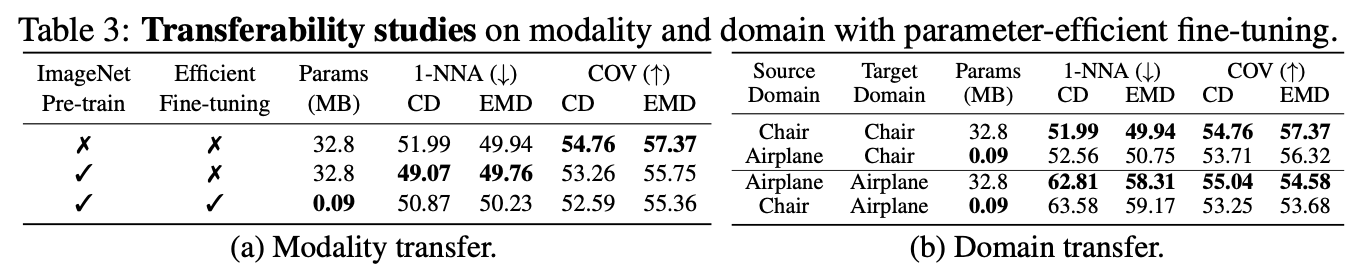

Influence of 2D Pretrain (ImageNet) and Transferability in Domain.

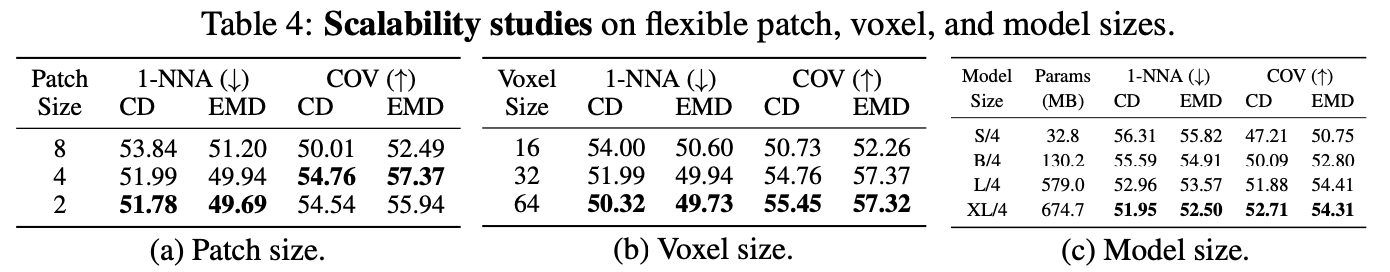

Scaling Patch size, Voxel size and Model Size.

Examples of Diffusion Process

Qualitative visualizations of the diffusion process on Chair, Airplane, Car shape generation.

Citation

@article{mo2023dit3d,

title = {DiT-3D: Exploring Plain Diffusion Transformers for 3D Shape Generation},

author = {Shentong Mo and Enze Xie and Ruihang Chu and Lanqing Hong and Matthias Nießner and Zhenguo Li},

journal = {arXiv preprint arXiv: 2307.01831},

year = {2023}

}

Paper

DiT-3D: Exploring Plain Diffusion Transformers for 3D Shape Generation

Shentong Mo, Enze Xie, Ruihang Chu, Lanqing Hong, Matthias Nießner, Zhenguo Li